
This section on assessment is part of the Math Methodology series on instruction, assessment, and curriculum design. The short essay that follows, The Role of Assessment, is part 2 of the essay, Teaching and Math Methodology, which includes:

Part 1: Math Methodology: Instruction

Part 2: Math Methodology: Assessment

Part 3: Curriculum: Content and Mapping

Assessment is more than collecting data on test performance. Although assessments have been used for accountability, they also serve to inform instruction and student learning goals. Anne Davies (2004) described assessment as a process of triangulation: gathering evidence from multiple sources over time that agreed-upon criteria have been met. Those sources are artifacts that students produce, observation notes on the process of students' learning, and documentation from talking with students about their learning.

Assessment includes guiding students to self-assess their learning, involving parents and students in discussions of progress, and students showing evidence of their learning to audiences they care about. It is a complex process because of differences in learning styles, multiple intelligences, and the diverse backgrounds that students bring to classrooms (Davies, 2004).

Davies (2004) stated key tenets on the role of assessment, which illustrate the partnership that should exist between teaching and learning. "Keeping students informed about the learning objectives or standards they are working toward helps support their success. Quality and success also become clearer for students when we engage them in setting criteria" (p. 2).

There are many forms of assessment, and educators need to know when to use a particular method, when not to, and what precautions apply. They also need to consider the level of knowledge being assessed. For example, Norman Webb identified four levels in his Depth of Knowledge (DOK) Framework: recall, skills and concepts, strategic thinking and reasoning, and extended thinking (Webb, 2002; Hess, 2013). Per Hess (2013), the "intended DOK level can be assigned to anything from an instructional question, to broader course objectives and assessment items/tasks" (p. 5). She provided multiple examples of products and activities at each level, which also apply to Common Core standards. The following are among those:

Level 1: Recall and Reproduction. Key words include: "Locate, calculate, define, identify, list, label, match, measure, copy, memorize, repeat, report, recall, recite, recognize, state, tell, tabulate, use rules, answer who, what, when, where, why, how" (p. 6). For example, learners might:

- "Plot/locate points on a graph"

- "Represent math relationships in words, pictures, or symbols"

- "Measure, record data"

- "Use formulas"

- "Evaluate expressions" (p. 6).

Level 2: Skills and Concepts. Key words include "Infer, categorize, organize and display, compare-contrast, modify, predict, interpret, distinguish, estimate, extend patterns, interpret, use context clues, make observations, summarize, translate from table to graph, classify, show cause/ effect, relate, edit for clarity" (p. 10). For example, learners might:

- "Explain the meaning of a concept using words, objects, and/or visuals"

- Complete "Complex calculation tasks involving decision points (e.g., standard deviation)"

- Construct models

- "Conduct measurement or observational tasks that involve organizing the data collected into basic presentation forms such as a table, graph, Venn diagram, etc." (p. 11).

Level 3: Strategic Thinking and Reasoning. Key words include "Critique, appraise, revise for meaning, assess, investigate, cite evidence, test hypothesis, develop a logical argument, use concepts to solve non-routine problems, explain phenomena in terms of concepts, draw conclusions based on data" (p. 14). For example, learners might:

- "Analyze survey results"

- "Create complex graphs or databases where reasoning and approach to data organization is not obvious"

- "Explain and apply abstract terms and concepts to real-world situations"

- "Design, conduct, or critique an investigation to answer a research question"

- "Propose an alternate solution to a problem studied" (pp. 14-15).

Level 4: Extended Thinking. Key words include "Initiate, design and conduct, collaborate, research, synthesize, self-monitor, critique, produce/present" (p. 18). For example, learners might:

- "Relate mathematical or scientific concepts to other content areas, other domains, or other concepts"

- "Conduct a project that specifies a problem, identifies solution paths, solves the problem, and reports results"

- "Design a mathematical model to inform and solve a practical or abstract situation" (p. 21).

Methods include case studies, collaborative/group projects, direct observation, essays, exams (unseen and seen/open-book), multiple-choice tests, oral questioning after observation, performance projects, portfolios, practical projects, presentations, problem sheets, self-assessment, simulations (forms of games), and oral exams. With the current focus on standardized testing, educators might overlook performance assessments, which also provide evidence of what students can do. Edutopia's video Comprehensive Assessment: An Overview clearly shows such evidence of student artifacts gathered over time, as Davies (2004) described, and offers ideas for your own classroom.

Also consider the Kansas State Department of Education Assessment Literacy Project as a professional development resource. It includes 21 online modules with video lessons and activities appropriate for all educators. W. James Popham provided the introductions to these modules. Modules address four main questions:

The following sections address systems for assessment (diagnostic, formative, summative), interim assessments, more on self-assessment, teacher-made tests, and vendor-made tests.

In Test Better, Teach Better: The Instructional Role of Assessment, Popham (2003) indicated that there are two kinds of tests that may or may not help a teacher to do a better instructional job: teacher-made classroom tests and externally imposed tests, which are "those tests required by state or district authorities and designed by professional test developers to measure student mastery of the sets of objectives experts have deemed essential" (Preface section).

The accountability movement has placed a great deal of stress on teachers to prepare students for state standardized tests, and even greater stress on students to perform well on them. These tests were mandated by the No Child Left Behind legislation and continue under provisions of the Every Student Succeeds Act (ESSA). Math assessments are mandated in each of grades 3-8 and once in grades 9-12 (Moran, 2015; 114th Congress, 2015).

Performance assessment is on the rise. More than one organization has developed, and continues to develop, performance assessments and tasks as part of implementing the Common Core State Standards and the emphasis on college and career readiness. In fact, under Title 34 of the Code of Federal Regulations (2022), a state's assessments must "Involve multiple up-to-date measures of student academic achievement, including measures that assess higher-order thinking skills - such as critical thinking, reasoning, analysis, complex problem solving, effective communication, and understanding of challenging content - as defined by the State. These measures may ... Be partially delivered in the form of portfolios, projects, or extended performance tasks" (section 200.2(b)(7)). Also see ESSA (114th Congress, 2015) section 1111(b)(2)(B)(vi).

W. James Popham (2007b) suggested that schools also need interim tests that they "can administer every few months to predict students' performances on upcoming accountability tests" (p. 80). Title 34 (Code of Federal Regulations, 2022) also addresses interim tests, and includes the provision that in lieu of a single summative assessment, states may implement "Multiple statewide interim assessments during the course of the academic year that result in a single summative score that provides valid, reliable, and transparent information on student achievement and, at the State's discretion, student growth" (section 200.2(b)(10)).

The Michigan Assessment Consortium provides resources on interim benchmark assessments including "definitions, quick primers, importance of identified purpose, [and] characteristics of measures to meet identified purposes." It reminds educators that "Interim [benchmark] assessments or (IBA’s) vary in purpose and use and therefore the characteristics or features incorporated in the design of these measures matters." Further, "A dozen different purposes have been identified for interim assessments (all legitimate) but more importantly few assessments can serve more than one or two purposes well."

Since at least 2001, ASCD (2021) has supported the use of multiple measures in assessment systems, rather than reliance on the outcome of a single test, to accurately measure achievement and to hold stakeholders accountable. Such assessment systems are

- Fair, balanced, and grounded in the art and science of learning and teaching;

- Reflective of curricular and developmental goals and representative of content that students have had an opportunity to learn;

- Used to inform and improve instruction;

- Designed to accommodate nonnative speakers and special needs students; and

- Valid, reliable, and supported by professional, scientific, and ethical standards designed to fairly assess the unique and diverse abilities and knowledge base of all students (High Stakes Testing section, pp. 20-21).

A balanced assessment system combines formative, interim, and summative assessments, with the emphasis on formative assessments. To create a balanced system, teachers need to consider two domains: the "standards-based core instruction domain that aligns to grade-level or advanced content" and the "intervention domain for students who are not yet achieving standards and need additional support" (Beard, Bruno, Mabry, et al., 2020, pp. 15-16).

Per Popham (2011), "In the U.S., what’s typically being described by the proponents of balanced assessment is the application of three distinctive measurement strategies: classroom assessments; interim assessments; and large-scale assessments" (p. 14). Of the three types, classroom assessments and large-scale assessments are supported by strong evidence. However, "interim assessments are neither supported by research evidence, nor are they regarded by the public or policy makers as being of particular merit" (p. 15).

Popham (2011) noted that interim assessments are also standardized tests that are "usually purchased from commercial vendors, but are sometimes created locally" (p. 14). The chief support for their use in balanced assessment "comes from the vendors who sell them" (p. 15). They can be used in the following ways:

The problem with their use is that they can impose an unwanted level of regimentation on instruction. For results of interim tests to be useful, "the timing of a teacher's instruction must mesh with what's covered in a given interim test" (p. 15).

Per Robert Slavin (2019), "Benchmark assessments are only useful if they improve scores on state accountability tests." The bad news, however, is that "Research finds that benchmark assessments do not make any difference in achievement." Studies that included benchmark assessments support this view: a summary of findings from 6 elementary reading studies and 4 elementary math studies showed mean effect sizes on achievement of essentially zero.

Slavin (2019) suggested possible reasons why benchmark assessments do not make a difference:

For those reasons, Slavin (2019) suggested schools can save a lot of time and money by eliminating benchmark assessments. "Yes, teachers need to know what students are learning and what is needed to improve it, but they have available many more tools that are far more sensitive, useful, timely, and tied to actions teachers can take."

Slavin's is only one perspective, however. Joan Herman and Eva Baker (2005) shared Slavin's premise that "There is little sense in spending the time and money for elaborate testing systems if the tests do not yield accurate, useful information" (para. 3). To this end, their six criteria, described in Making Benchmark Testing Work, give educators guidelines for developing, selecting, or purchasing benchmark tests that work. The criteria address alignment, diagnostic value, fairness, technical quality, utility, and feasibility. Systematic design and continual evaluation of outcomes are key.

Drawing on the TIMSS video study, Paul Black and Dylan Wiliam (1998) stated, "A focus on standards and accountability that ignores the processes of teaching and learning in classrooms will not provide the direction that teachers need in their quest to improve" (para. 2). Those processes involve teachers making assessment decisions, which, Popham (2003) indicated, can be based on the structure of the tests themselves or on students' performance on those tests. Teachers can make decisions about the nature and purpose of the curriculum, about students' prior knowledge, about how long to teach something, and about the effectiveness of instruction (Chapter 1, What Sorts of Teaching Decisions Can Tests Help? section).

Sometimes state and district content standards are not worded clearly enough for classroom use and are open to multiple interpretations. Hence, Popham (2003, Chapter 1) pointed out the need for teachers to examine sample test items to clarify the intent of a particular curricular goal. They can then focus instruction appropriately on that intent.

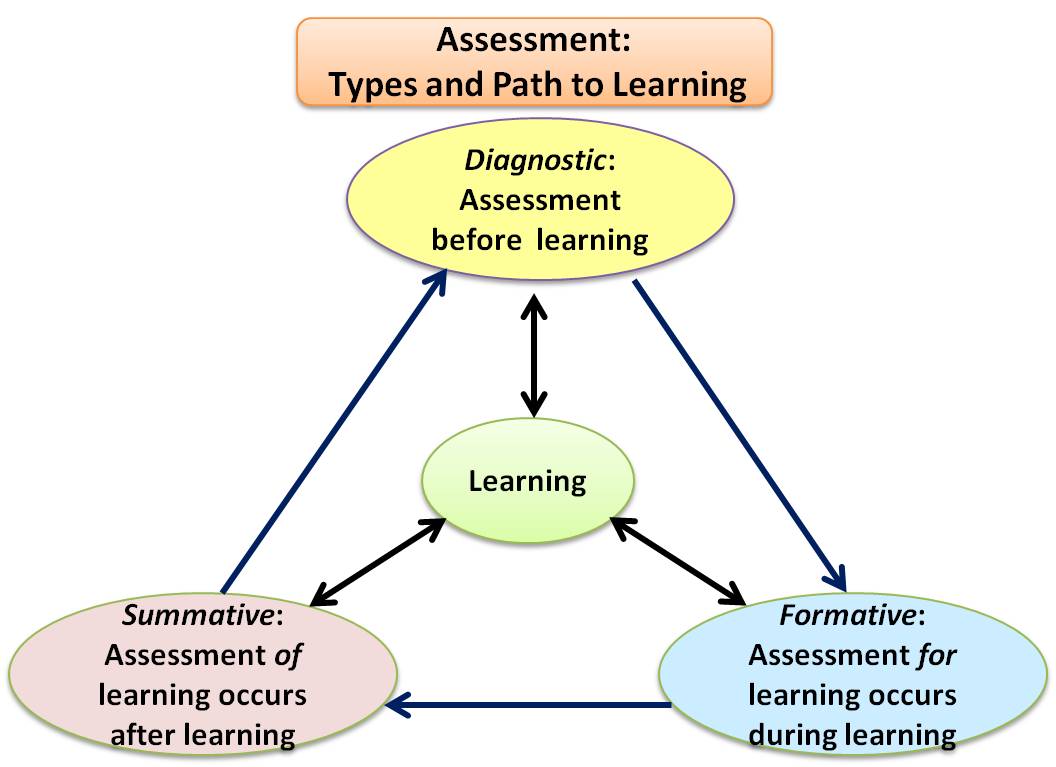

How teachers use assessment plays a major role in achieving standards. Assessments can be diagnostic, formative, and summative. As you read about those categories in what follows, consider the assessment and grading practices for effective learning suggested by Jay McTighe and Ken O'Connor (2006):

Use summative assessments to frame meaningful performance goals. ... To avoid the danger of viewing the standards and benchmarks as inert content to “cover,” educators should frame the standards and benchmarks in terms of desired performances and ensure that the performances are as authentic as possible. Present those tasks at the beginning of a new unit.

Show criteria and models in advance. Rubrics and multiple models showing both strong and weak work help learners judge their own performances.

Assess before teaching.

Offer appropriate choices. While keeping goals in mind, judiciously offered options give students different opportunities to best demonstrate their learning.

Provide feedback early and often. Learners will benefit from opportunities to act on the feedback—to refine, revise, practice, and retry.

Encourage self-assessment and goal setting.

Allow new evidence of achievement to replace old evidence. (pp. 13-19)

McTighe (2018) offered additional assessment practices that enhance learning. Learners benefit from assessments that are "open," which means "there isn't a single correct answer or a single way of accomplishing the task." "There is an audience other than the teacher" when students "produce tangible products and/or performances to show evidence of their learning." They have "opportunities to work with others (collaboration)." The role of the teacher is that of a coach (p. 20).

Consider motivation. Also consider the role of motivation in the assessments you provide. Richard Curwin (2014) defined educational motivation as "the desire to learn" and argued that "the evaluation process is one of the most formidable killers of motivation in education. Rewards, punishment, incentives, threats, or any external strategy might get students to do their work, but they rarely influence whether children want to learn. These externals create finishers, not learners" (p. 38). He believes effort should be part of the grading process and that there are legitimate ways of evaluating effort without lowering standards: for example, counting improvement, seeking help, offers to help other students, and extra work. He offered the following evaluation strategies to help encourage students to try harder and increase their internal motivation:

Consider Grading Practices. Per Susan Brookhart, Thomas Guskey, Jay McTighe, and Dylan Wiliam (2020), "Grading should be a part of a comprehensive, balanced assessment system." Poor grading practices can actually harm learners. They offered eight principles for improving grading:

Diagnostic assessments, typically given at the beginning of an instructional unit or school year, determine students' prior knowledge, strengths, weaknesses, and skill level. They help educators adjust curriculum or provide for remediation. The National Center on Intensive Intervention at American Institutes for Research provides an Academic Screening Tools Chart to help educators select tools for identifying students who might have a math difficulty and need an intervention. The chart is searchable by subject (reading and math) and grade band.

According to Tomlinson and McTighe (2006) in Integrating Differentiated Instruction and Understanding by Design, diagnostic assessments can also help "identify misconceptions, interests, or learning style preferences," and help with planning for differentiated instruction. Assessments might take the forms of "skill-checks, knowledge surveys, nongraded pre-tests, interest or learning preference checks, and checks for misconceptions" (p. 71).

Thus, pretests help "to isolate the things your new students already know as well as the things you will need to teach them" (Popham, 2003, Chapter 1, Using Tests to Determine Students' Entry Status section). Further, "A pretest/post-test evaluative approach ... can contribute meaningfully to how teachers determine their own instructional impact" (Chapter 1, Using Tests to Determine the Effectiveness of Instruction section).

The point of a diagnostic is not just to assess, but to do something with test results leading to improved learning. Thus, progress monitoring with individual students or an entire class makes sense. According to the National Center on Student Progress Monitoring (NCSPM), progress monitoring is "a scientifically based practice." The term is relatively new, and educators might be more familiar with Curriculum-Based Measurement and Curriculum-Based Assessment. An implementation involves determining a student’s current levels of performance and setting goals for learning that will take place over time. "The student’s academic performance is measured on a regular basis (weekly or monthly). Progress toward meeting the student’s goals is measured by comparing expected and actual rates of learning. Based on these measurements, teaching is adjusted as needed. Thus, the student’s progression of achievement is monitored and instructional techniques are adjusted to meet the individual students learning needs" (NCSPM, n.d., Common Questions section).
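The comparison of expected and actual rates of learning described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the NCSPM procedure: the weekly scores are hypothetical, the goal is assumed to be a fixed expected gain per week, and the actual rate is estimated with an ordinary least-squares trend line, which is one common choice among several.

```python
from typing import Sequence


def rate_of_improvement(scores: Sequence[float]) -> float:
    """Estimate the actual rate of learning as the least-squares slope
    of the scores against week number (weeks 0, 1, 2, ...)."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores to estimate a rate")
    mean_week = (n - 1) / 2
    mean_score = sum(scores) / n
    numerator = sum((week - mean_week) * (score - mean_score)
                    for week, score in enumerate(scores))
    denominator = sum((week - mean_week) ** 2 for week in range(n))
    return numerator / denominator


def compare_rates(scores: Sequence[float],
                  expected_weekly_gain: float) -> tuple[float, bool]:
    """Compare the actual rate with the expected rate (an assumed goal).

    Returns the actual weekly gain and whether it meets the expected gain;
    False is a signal that instruction may need to be adjusted."""
    actual = rate_of_improvement(scores)
    return actual, actual >= expected_weekly_gain


# Hypothetical weekly probe scores (e.g., problems correct per probe)
weekly_scores = [12, 14, 13, 17, 18, 20]
actual, on_track = compare_rates(weekly_scores, expected_weekly_gain=1.5)
print(f"actual gain: {actual:.2f} per week; on track: {on_track}")
```

With these made-up scores the trend line rises about 1.6 points per week, which meets the assumed goal of 1.5. A trend line is used here instead of simply subtracting the first score from the last because it draws on every data point and is less swayed by one unusually high or low week.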

Progress monitoring is one component of Response to Intervention (RTI), which is an education model for early identification of students at risk for learning disabilities. Of equal importance is the emphasis on providing appropriate learning experiences for all students by ensuring current levels of skill and ability are aligned with the instructional and curricular choices provided within their classroom (RTI Action Network, n.d., What is RTI? section). The RTI Action Network provides extensive information on RTI and the how-tos of progress monitoring.

The National Center on Intensive Intervention at American Institutes for Research has identified Tools for Academic Progress Monitoring for math, reading, and writing that demonstrate sufficient evidence for meeting progress monitoring standards. These are representative of progress monitoring products.

Formative assessment is assessment for learning, carried out with current students. It "is an essential component of classroom work and its development can raise standards of achievement" (Black & Wiliam, 1998, Are We Serious About Standards? section, para. 2). It "has three key elements: 1) eliciting evidence about learning to close the gap between current and desired performance; 2) providing feedback to students; and 3) involving students in the assessment and learning process" (Heritage, 2008, p. 6). It's important to note that there "is no single way to collect formative evidence because formative assessment is not a specific kind of test. For example, teachers can gather evidence through interactions with students, observations of their tasks and activities, or analysis of their work products" (Heritage, 2011, p. 18).

In Advancing Formative Assessment in Every Classroom: A Guide for Instructional Leaders, Moss and Brookhart (2019) provided additional details, stating that formative assessment, unlike summative assessment, is an ongoing, fluid process influenced by student needs and teacher feedback. It follows a continuous improvement model (p. 7) and involves six interrelated elements:

- Shared learning targets and criteria for success

- Feedback that feeds learning forward

- Student self-assessment and peer assessment

- Student goal setting

- Strategic teacher questioning

- Student engagement in asking effective questions (pp. 5-6).

NWEA has a series of Formative Conversation Starters for grades 2-8. Each includes "questioning strategies and practical tips for using guided conversations to uncover how [students] think and communicate about mathematical concepts." "Teachers may wish to use these conversation starters in one-on-one conferences with students or in small groups. Formative Conversation Starters approach student knowledge by presenting a single standards-based assessment item and leveraging the item to elicit conversation through clustered questioning" (p. 3 in each starter).

Such assessments provide immediate evidence of student learning and can be used to help improve the quality of instruction and to monitor progress in achieving learning outcomes. However, to design an "optimally effective" formative assessment, teachers should know the associated learning progression. "A learning progression is a carefully sequenced set of building blocks that students must master en route to mastering a more distant curricular aim. These building blocks consist of subskills and bodies of enabling knowledge" (Popham, 2007a, online para. 2). Learning progressions are typically constructed using backward analysis.

Popham (2007a) pointed out important issues related to developing learning progressions. It's comforting to know that "with few exceptions, there is no single, universally accepted and absolutely correct learning progression underlying any given high-level curricular aim." Following less-is-more advice, "learning progressions should contain only those subskills and bodies of enabling knowledge that represent the most significant building blocks." However, isolating those building blocks takes time and "requires rigorous cerebral effort."

See Progressions for the Common Core State Standards for Mathematics, the final version by the Common Core Writing Team led by Bill McCallum (2023), posted at Mathematical Musings. The document describes the progression of topics within strands across K-8 and high school.

For formative assessments to have the most impact on learning, Tim Westerberg (2015) advised educators to keep the following five principles in mind when designing classroom assessments:

Per Moss and Brookhart (2009), when used appropriately, formative assessment is also strongly linked to increasing students' intrinsic motivation to learn. Motivation to learn has four components: self-efficacy (one's belief in one's ability to succeed), self-regulation, self-assessment, and self-attribution (one's perceptions and explanations of success or failure, which influence the amount of effort an individual will put into an activity in the future) (chapter 1).

"Formative assessment includes both formal and informal methods, such as ungraded quizzes, oral questioning, observations, draft work, think-alouds, student constructed concept maps, dress rehearsals, peer response groups, and portfolio reviews" (Tomlinson & McTighe, 2006, p. 71). A formative assessment monitors progress and indicates what adjustments to instruction an educator might need to make.

At the beginning of a lesson or unit, formative assessment might take the form of "entrance tickets, K-W-L chart activities, Venn diagrams, think-pair-share" and so on (Beard, Bruno, Mabry, et al., 2020, p. 17). It might also take the form of Classroom Challenges, such as those provided by the Mathematics Assessment Project (MAP). These challenges are lessons that support teachers in formative assessment. Per the description, MAP provides "100 lessons in total, 20 at each grade from 6 to 8 and 40 for ‘Career and College Readiness’ at High School Grades 9 and above. Some lessons are focused on developing math concepts, others on solving non-routine problems."

Catlin Tucker (2021) noted the following strategies for assessing prior knowledge:

For progress monitoring and checking for understanding, Tucker (2021) suggested using quick polls, "tell me how" activities, and problems that students analyze for errors, either with a partner or in small groups. In "tell me how" activities, students explain how they would solve a problem, task, or question posed, which reveals their thinking. Teachers can also use 3-2-1 exit tickets in which students write down "three things they learned, two connections they made, and one question they have." Thomas (2019) suggested sorting the exit tickets into three stacks as a quick way to get the big picture on understanding: those who got it, those who sort of got it, and those who did not get it. The size of the stacks indicates what to do next. Teachers can use Google Classroom's Question tool to post short-answer or multiple-choice questions. After a question is posted, the tool "can track the number of students who responded. You can also draft questions to post later and post a question to individual students."

However, the California Department of Education (2014) cautions: "Not every form of assessment is appropriate for every student or every topic area, so a variety of assessment types need to be provided for formative assessment. These could include (but are not limited to) graphic organizers, student observation, student interviews, journals and learning logs, exit ticket activities, mathematics portfolios, self and peer-evaluations, short tests and quizzes, and performance tasks" (p. 85).

To illustrate, students might write their understanding of vocabulary or concepts before and after instruction, or summarize the main ideas they've taken away from a lecture, discussion, or assigned reading. They can complete a few problems or questions at the end of instruction and check answers. Teachers can interview students individually or in groups about their thinking as they solve problems, or assign brief, in-class writing assignments (Boston, 2002, Examples of Formative Assessment section).

These writing assignments, accompanied by peer group discussions, are essential, as "Knowledge and thinking must go hand in hand" (McConachie et al., 2006, p. 8). Embedding writing in performance tasks enables teachers to "guide students to deeper levels of understanding" (p. 12). McConachie et al. provided the following example, appropriate for a grade 7 math unit on percents:

To celebrate your election to the student council, your grandparents take you shopping. You have a 20-percent-off coupon. The cashier takes 20 percent off the $68.79 bill. Your grandmother remembers that she has an additional coupon for 10 percent off. The cashier takes the 10 percent off what the cash register shows. Does this result in the same amount as 30 percent off the original bill? Explain why or why not? (p. 12)

In determining whether students truly understand percents in the above example, teachers are assessing whether students know what a percent is, whether they can use percents in a real-world application, and whether they can interpret their answers appropriately.
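As a quick answer check a teacher might use when reviewing student responses, the arithmetic for the percent problem works out as follows (amounts rounded to the nearest cent):

$$
\begin{aligned}
\text{20\% off, then 10\% off: } & \$68.79 \times (1 - 0.20) \times (1 - 0.10) = \$68.79 \times 0.72 \approx \$49.53 \\
\text{30\% off the original: } & \$68.79 \times (1 - 0.30) = \$68.79 \times 0.70 \approx \$48.15
\end{aligned}
$$

The results differ because the second discount is taken off the already reduced amount, so the combined discount is $1 - 0.80 \times 0.90 = 0.28$, or 28 percent rather than 30 percent, and the shopper pays about $1.38 more than with a single 30 percent discount.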

The RAFT method is a particularly useful formative assessment writing strategy for checking understanding. According to Douglas Fisher and Nancy Frey (2014) in Checking for Understanding: Formative Assessment Techniques for Your Classroom, 2nd edition, RAFT prompts ask students to consider the role of the writer (R), the audience (A) to whom the response is written, the format (F) of the writing, and the topic (T) of the writing. For example, to determine if students understand characteristics of triangles, one such prompt might be:

R: Scalene triangle

A: Your angles

F: Text message

T: Our unequal relationship (pp. 72-73).

The RAFT Model in the following table includes other examples:

RAFT Examples

| Role | Audience | Format | Topic |
|---|---|---|---|
| Zero | Whole numbers | Campaign speech | Importance of 0 |
| Scale factor | Architect | Directions for a blueprint | Scale drawings |
| Repeating decimal | Customers | Petition | Proof/check for set membership |
| Prime number | Rational numbers | Instructions | Divisibility rules |
| Function | Relations | Article | Argue the importance of functions |
| Exponent | Jury | Instructions to the jury | Laws of exponents |
| Variable | Equations | Letter | Role of variables |
| Acute triangle | Obtuse triangle | Letter | Explain the differences between the triangles |

Homework is one method for students to take responsibility for their learning. It also falls into the category of formative assessment, as it "typically supports learning in one of four ways: pre-learning, checking for understanding, practice, and processing" (Vatterott, 2009, p. 96). However, educators have varying opinions on homework ranging from how much to assign to what kind (e.g., acquisition or reinforcement of facts, principles, concepts, attitudes, or skills), for whom, when to assign it, and whether or not it should be graded.

Not all of teachers' homework practices are grounded in research. Per Cathy Vatterott (2009):

Viewing homework as formative feedback changes our perspective on the grading of homework. Grading becomes not only unnecessary for feedback, but possibly even detrimental to the student's continued motivation to learn. With this new perspective, incomplete homework is not punished with failing grades but is viewed as a symptom of a learning problem that requires investigation, diagnosis, and support. (p. 124)

Teachers cling to grading homework for a variety of reasons. Vatterott (2011) noted that teachers fear students will not do homework if it is not graded, believe that students' hard work should be rewarded, and think that homework grades help students who test poorly (p. 61). Homework should still be assessed, because students need feedback on their learning. However, if homework is assigned for learning purposes, teachers need to rethink when it should count toward a grade.

Vatterott (2011) also proposed that homework be separated into two categories: formative and summative. Formative homework, such as practice with math problems, should not be factored into the overall course grade. Homework that serves as summative assessment, such as research papers or portfolios of student work, may be counted. If teachers wish to tie homework to assessments, the easiest way "in students' minds is to allow them to use homework assignments and notes when taking a test. Another method is to correlate the amount of homework completed with test scores," perhaps by placing two numbers on a test paper: the test score itself and "the student's number of missing homework assignments" (p. 63). If teachers allow retakes of failed summative assessments, they might also require learners who "don't complete a set of homework assignments and then fail the related summative assessment" to "go back and complete all the formative tasks before they can retake the assessment" (p. 64).

So how much homework should be assigned? As noted, guidelines vary. The U.S. Department of Education's Helping Your Students with Homework: A Guide for Teachers (Paulu, 2003) offers guidelines for an appropriate total amount of homework each day across all subjects. Per Paulu:

Educators believe that homework is most effective for children in first through third grades when it does not exceed 20 minutes each school day. From fourth through sixth grades, many educators recommend from 20 to 40 minutes a school day for most students. For students in 7th- through 9th-grades, generally, up to 2 hours a school day is suitable. Ninety minutes to 2 hours per night are appropriate for grades 10 through 12. Amounts that vary from these guidelines are fine for some students. (p. 16)

In her Rethinking Homework, 2nd Edition: Best Practices That Support Diverse Needs, Vatterott (2018) provided the following somewhat different homework guidelines. When assigning homework, teachers should consider the importance of personal and family time, individual differences, and the need to inform parents and students of what to do if they encounter challenges in completing homework.

Black and Wiliam (1998) provided the following suggestions for improving formative assessment (How can we improve formative assessment? section):

In terms of building self-esteem in pupils, “feedback to any pupil should be about the particular qualities of his or her work, with advice on what he or she can do to improve, and should avoid comparisons with other pupils” (para. 3).

Self-assessment by pupils is an essential component of formative assessment. It involves three elements: students must recognize the desired goal, have evidence about their present position, and have some understanding of a way to close the gap between the two. “[I]f formative assessment is to be productive, pupils should be trained in self-assessment so that they can understand the main purposes of their learning and thereby grasp what they need to do to achieve” (para. 7).

In terms of effective teaching:

“[O]pportunities for pupils to express their understanding should be designed into any piece of teaching, for this will initiate the interaction through which formative assessment aids learning” (para. 9).

“[T]he dialogue between pupils and a teacher should be thoughtful, reflective, focused to evoke and explore understanding, and conducted so that all pupils have an opportunity to think and to express their ideas” (para. 13).

“[F]eedback on tests, seatwork, and homework should give each pupil guidance on how to improve, and each pupil must be given help and an opportunity to work on the improvement” (para. 15).

Stephen Chappuis and Jan Chappuis (2007/2008) said that a key point on the nature of formative assessment is that "there is no final mark on the paper and no summative grade in the gradebook" (p. 17). The intent of this type of assessment for learning is for students to know where they are going in terms of learning targets they are responsible for mastering, where they are now, and how they can close any gap. "It functions as a global positioning system, offering descriptive information about the work, product, or performance relative to the intended learning goals" (p. 17). Such descriptive feedback identifies specific strengths, then areas where improvement is needed, and suggests specific corrective actions to take. For example, in a study of graphing, an appropriate descriptive feedback statement might be "You have interpreted the bars on this graph correctly, but you need to make sure the marks on the x and y axes are placed at equal intervals" (p. 17). Notice that the statement does not overwhelm the student with more than he/she can act on at one time.

Teachers, however, must help students to understand the role of formative assessment, as in the minds of many learners assessment of any kind "equals test equals grade equals judgment" (Tomlinson, 2014, p. 11). Hence, they easily become discouraged, rather than appreciating that such assessments do not call for perfection. Tomlinson suggested that educators might reinforce this message, telling learners something like the following:

When we're mastering new things, it's important to feel safe making mistakes. Mistakes are how we figure out how to get better at what we are doing. They help us understand our thinking. Therefore, many assessments in this class will not be graded. We'll analyze the assessments so we can make improvements in our work, but they won't go into the grade book. When you've had time to practice, then we'll talk about tests and grades. (p. 11)

Tomlinson (2013/2014) also believes it is important for teachers to inspire students to care and that "Mastery also implies attitudes that characterize success--a work ethic, willingness to think strategically, tolerance for ambiguity, capacity to delay gratification, clarity about what quality looks like, and so on" (p. 88).

Thomas Guskey (2007) pointed out that formative assessments will not necessarily lead to improved student learning or teacher quality without appropriate follow-up corrective activities after the assessments. These activities have three essential characteristics. They present concepts differently, engage students differently in learning, and provide students with successful learning experiences. For example, if a concept was originally taught using a deductive approach, a corrective activity might employ an inductive approach. An initial group activity might be replaced by an individual activity, or vice versa. Corrective activities can be done with the teacher, with a student's friend, or by the student working alone. As learning styles vary, providing several types of such activities to give students some choice will reinforce learning (pp. 29-30).

Guskey (2007) suggested several possible corrective activities, which are included in the following table. He recommended these be done during class time to ensure those who need them the most will take part.

How to Use Corrective Activities

| Activity | Helpful Characteristic | With Teacher (T), Friend (F), Alone (A) |
|---|---|---|
| Reteaching | Use a different approach and different examples. | T |
| Individual Tutoring | Tutors can also include older students, teacher aides, and classroom volunteers. | T, F |
| Peer Tutoring | Avoid mismatched students, as this can be counterproductive. | F |
| Cooperative Teams | Teachers group 3-5 students to help one another by pooling the knowledge of group members. Teams are heterogeneous and might work together for several units. | F |
| Course Textbooks | Reread relevant content that corresponds to problem areas. Provide students with exact sections or examples so they can go directly to them. | T, F, A |
| Alternative Textbooks | These might offer a different presentation, explanation, or examples. | T, F, A |
| Alternative Materials, Workbooks, Study Guides | Include videotapes, audiotapes, DVDs, hands-on materials, manipulatives, Web resources, and so on. | T, F, A |
| Academic Games | Can promote learning via cooperation and collaboration. | T, F, A |
| Learning Kits | Usually include visual presentations and tools, models, manipulatives, and interactive multimedia content. Can be commercial or teacher made. | F, A |
| Learning Centers/Labs | Include hands-on and manipulative tasks. Involve a structured activity with a specific assignment to complete. | F, A |
| Computer Activities | Can be effective when students become familiar with how a program works and when the software matches learning goals. | F, A |

Source: Adapted from Guskey, T. (2007). The rest of the story. Educational Leadership, 65(4), 31. https://www.ascd.org/el/articles/the-rest-of-the-story

||

According to Guskey (2007), some students will demonstrate mastery of concepts on an initial formative assessment. These students are ideal candidates for enrichment activities while others are engaged in corrective activities. "Rather than being narrowly restricted to the content of specific instructional units, enrichment activities should be broadly construed to cover a wide range of related topics" (p. 32). As with corrective activities, students should have some freedom to choose an activity that interests them. Teachers might consider having students produce a product of some kind summarizing their work. This enhances the experience so that students don't construe the time spent as busy work.

Books

Dylan Wiliam (2011) provided over 50 formative assessment techniques for classroom use in Embedded Formative Assessment: Practical strategies and tools for K-12 teachers.

Page Keeley and Cheryl Rose Tobey's (2011) Mathematics Formative Assessment: 75 Practical Strategies for Linking Assessment, Instruction, and Learning is noteworthy.

Robert Marzano (2010) delved into Formative Assessment and Standards-Based Grading for mastery learning.

Northwest Evaluation Association's (2020) Making it work: How formative assessment can supercharge your practice is an e-book.

Articles

Council of Chief State School Officers. (2018). Revising the definition of formative assessment. https://ccsso.org/resource-library/revising-definition-formative-assessment

Foster, D., & Poppers, A. (2009, November). Using formative assessment to drive learning: The Silicon Valley Mathematics Initiative: A twelve-year research and development project. The Silicon Valley Mathematics Initiative is at https://svmimac.org

Heritage, M. (2008). Learning progressions: Supporting instruction and formative assessment. Washington, DC: Council of Chief State School Officers. https://csaa.wested.org/resource/learning-progressions-supporting-instruction-and-formative-assessment/

Heritage, M. (2010). Formative assessment and next-generation assessment systems: Are we losing an opportunity? Washington, DC: Council of Chief State School Officers. https://files.eric.ed.gov/fulltext/ED543063.pdf

Popham, W. J. (2009). All about assessment / A process – not a test. Educational Leadership, 66(7), 85-86. https://www.ascd.org/el/articles/a-process-not-a-test

Stiggins, R. J. (2002). Assessment crisis: The absence of assessment FOR learning. Phi Delta Kappan, 83, 758-765. http://www.edtechpolicy.org/CourseInfo/edhd485/AssessmentCrisis.pdf

Unlike formative assessment, which is assessment for learning, "Summative assessment is the assessment of learning at a particular time point and is meant to summarize a learner's skills and knowledge at a given point of time. Summative assessments frequently come in the form of chapter or unit tests, weekly quizzes, end-of-term tests, or diagnostic tests" (California Department of Education, 2014, p. 85). These assessments provide for accountability. Carnegie Mellon University (2022) noted the difference between formative and summative assessments, stating that the "goal of summative assessment is to evaluate student learning at the end of an instructional unit by comparing it against some standard or benchmark" and that such "assessments are often high stakes."

Traditional assessments might include multiple choice, true/false, and matching. Alternative assessments take the form of short answer questions, essays/papers, electronic or paper-based portfolios, journal writing, oral presentations, demonstrations, creation of a product or final project, student self-assessment and reflections, and performance tasks that are assessed by predetermined criteria noted within rubrics. Self- and peer assessments can be both formative and summative in nature. They help students take responsibility for, and become critical evaluators of, their own work.

Assessments related to the Common Core State Standards in Mathematics also include performance assessments and extended-response questions. Christina Brown and Pascale Mevs (2012) noted the following definition of performance assessment:

Quality Performance Assessments are multi-step assignments with clear criteria, expectations and processes that measure how well a student transfers knowledge and applies complex skills to create or refine an original product. (p. 1)

Jay McTighe (2015) presented seven general characteristics of performance tasks, which might be learning activities or assessments. Performance tasks:

In Overcoming Textbook Fatigue, Releah Lent (2012) noted that performance-based assessments take many forms. They might include project exhibits, oral presentations, debates, panel discussions on open-ended questions, lab experiments, multimedia presentations, and demonstrations of experiments or solutions to problems. Learners might conduct interviews, create visual displays (e.g., graphs, charts, posters, illustrations, storyboards, cartoons) or photo essays, construct models, or contribute to blogs, wikis, or other electronic projects (Lent, 2012, p. 137). [Note: You will find more about performance tasks in CT4ME's section on curriculum mapping.]

In Assessment to the Core, a webinar hosted by the School Improvement Network, McTighe (2014) presented a Framework of Assessment Approaches and Methods (slides handout, p. 4), which highlights potential forms of performance assessments in the following table.

FRAMEWORK OF ASSESSMENT APPROACHES AND METHODS: How might we assess student learning in the classroom?

SELECTED RESPONSE ITEMS: multiple choice, true-false, matching

PERFORMANCE-BASED ASSESSMENTS

| Constructed Responses | Products | Performances | Process-Focused |
|---|---|---|---|
| fill in the blank: words, phrases | essay | oral presentation | oral questioning |
| short answer: sentences, paragraphs | research paper | dance/movement | observation ("kid watching") |
| label a diagram | blog/journal | science lab demonstration | interview |
| Tweets | lab report | athletic skills performance | conference |
| "show your work" | story/play | dramatic reading | process description |
| representation: e.g., fill in a flowchart or matrix | concept map* | enactment | "think aloud" |
| | portfolio | debate | learning log |
| | illustration | musical recital | |
| | science project** | Prezi/PowerPoint | |
| | 3-D model | music performance | |
| | iMovie | | |
| | podcast | | |

Source: School Improvement Network. (2014, January). Assessment to the Core: Assessments that enhance learning [Webinar featuring Jay McTighe].

*Note: See How to Make a Concept Map Easily -- With Examples, provided by Shaun Killian (2019) at Evidence-Based Teaching. **McTighe's example was a science project. Consider a math project in the math classroom.

Marzano and Toth (2014) indicated that, for learners to do well on assessments involving performance tasks, teachers need to shift their instructional practice to help learners engage in cognitively complex tasks.

"Engaging in cognitively complex tasks is not merely an end-of-unit or culminating activity. Students must begin to “live” in a land of cognitive complexity. Students who are presented with a complex knowledge utilization task at the end of a unit, for instance, with no questions, tasks or activities built-in along the way that required them to use that level of thinking, will have much more difficulty making meaning of the task. Effective teachers incorporate “short visits” throughout the unit to help build student capacity for complex tasks." (p. 19)

Unfortunately, among the 18 instructional strategies Marzano and Toth observed teachers using, the frequency of strategies related to engaging students in cognitively complex tasks involving hypothesis generation and testing, revising knowledge, and organizing students for cognitively complex tasks was just 3.2%. Much instruction remains teacher-centered: the most frequently used strategies (47%) were associated with lecture, practice, and review (Marzano & Toth, 2014, p. 14).

In Engaging the Online Learner: Activities and Resources for Creative Instruction, Conrad and Donaldson (2004, 2011) characterized an authentic activity as one that "simulates an actual situation" and "draws on the previous experiences of the learners" (Conrad & Donaldson, 2004, p. 85). They posed six questions to guide educators who design such activities:

Is the activity authentic?

Does it require learners to work collaboratively and use their experiences as a starting point?

Are learners allowed to learn from their mistakes?

Does the activity have value beyond the learning setting?

Does the activity build skills that can be used beyond the life of the course?

Do learners have a way to implement their outcomes in a meaningful way? (p. 86).

The School of Education at the University of Wisconsin-Stout has extensive collections to help educators create and use rubrics: rubrics for assessment, and rubrics and assessment resources.

Educators will find a growing collection of performance assessments within the Performance Assessment Resource Bank, a project of the Understanding Language and Stanford Center for Assessment, Learning, and Equity and the Stanford Center for Opportunity Policy in Education in collaboration with the Council of Chief State School Officers Innovation Lab Network (About section). Free K-12 performance tasks are available for math, English/language arts, science, and history/social studies. Users can filter them by type of task, subject, course, and grade level.

Finally, as an important reminder about differentiation, Carol Tomlinson and Tonya Moon (2011) stated:

"Summative assessments can be differentiated in terms of complexity of the language of directions, providing varied options for expressing learning, degree of structure vs. independence required for the task, the nature of resource materials, and so on. What cannot be differentiated is the set of criteria that determines success. In other words, with the exception of students whose educational plans indicate otherwise, the KUDs [Know, Understand, Do] established at the outset of a unit remain constant for all students" (p. 5).

Consider having students develop a portfolio or an e-portfolio.

Jon Mueller (2018) defined a portfolio as "A collection of a student's work specifically selected to tell a particular story about the student." It is "not the pile of student work that accumulates over a semester or year. ... Portfolios typically are created for one of the following three purposes: to show growth, to showcase current abilities, and to evaluate cumulative achievement." Mueller provides samples of work to include in a portfolio related to each purpose. He elaborated on seven questions that need to be addressed when creating a portfolio assignment. These relate to purpose, audience, content, process, management, communication, and evaluation. For example, "purposes for the portfolio should guide the development of it, [and] the selection of audiences should shape its construction."

Lent (2012) described a portfolio as "a collection of items that is assembled by students or their teachers to show a range of work in a subject." It can be used for both formative and summative assessments. For a structured portfolio, educators might provide activities and assignments to include. Learners would then create a table of contents (p. 134).

George Lorenzo and John Ittelson (2005) defined an e-portfolio as "a digitized collection of artifacts, including demonstrations, resources, and accomplishments that represent an individual, group, community, organization, or institution. The collection can be comprised of text-based, graphic, or multimedia elements archived on a Web site or on other electronic media" (p. 2).

In Digital-Age Assessment, Harry Tuttle (2007) recommended using e-portfolios as a method to look beyond traditional assessment. "A common e-portfolio format includes a title page; a standards' grid; a space for each individual standard with accompanying artifacts and information on how each artifact addresses the standard; an area for the student's overall reflection on the standard; and a teacher formative feedback section for each standard. Within the e-portfolio, the evidence of student learning may be in diverse formats such as Web pages, e-movies, visuals, audio recordings, and text" (Getting Started section).

Digital Portfolios by Mike Fisher includes numerous resources for implementing portfolios, such as articles/research, tools, examples, reflections, and more. It is well worth investigating.

See the tutorial on creating an e-portfolio with Google Sites.

Caution: Creating an e-portfolio requires a degree of computer literacy. Some learners might prefer a paper-based portfolio to showcase their work.

"Student self-assessment can be defined as the process in which students gather information about and reflect on their own learning in relation to a learning goal. This process involves three parts in which students: (1) recognize and understand the desired learning goal, (2) monitor and evaluate the quality of their thinking and performance to gather evidence about their current position in relation to the learning goal, and (3) acquire the understanding, strategies, and skills to close the gap between their current position and the desired performance" (Learning Point, 2020).

Reflection is a key component of experiential learning. Describing David Kolb's theory of experiential learning, McLeod (2017) noted that, for learning to be effective, a person needs to progress through a cycle of four stages, none of which is effective as a learning procedure on its own. Those stages include:

"(1) having a concrete experience followed by (2) observation of and reflection on that experience which leads to (3) the formation of abstract concepts (analysis) and generalizations (conclusions) which are then (4) used to test a hypothesis in future situations, resulting in new experiences." (Experiential Learning section)

Learning how to self-assess is an incremental process that can begin in the elementary grades. "During self-assessment, students reflect on the quality of their work, judge the degree to which it reflects explicitly stated goals or criteria, and revise. Self-assessment is formative...Self-evaluation, in contrast, is summative--it involves students giving themselves a grade" (Andrade, 2007, p. 60).

For self-assessment to be meaningful, students must prove to themselves that it can make a difference in their learning. In a differentiated classroom, self-assessment "also enables student and teacher to focus both on nonnegotiable goals for the class and personal or individual goals that are important for the development of each learner" (Tomlinson & McTighe, 2006, p. 80). Alison Preece (1995) provided eight tips for success. Teachers might point out the payoff; start small and keep things simple; build self-[assessment] into day-to-day activities; make it useful; clarify criteria; focus on strengths; encourage variety and integrate self-[assessment] strategies with peer and teacher [assessment]; and grant it a high profile (p. 30).

As an example of building self-assessment into day-to-day activities, John Bond (2013) noted three easy-to-implement reflective strategies. Students might complete "I learned" statements. They could also use a think-aloud, reflecting on what has just been taught and sharing what they learned with a partner. Finally, they might complete "Clear and Unclear Windows." They divide a sheet of paper into two columns headed Clear and Unclear. They then fill in, respectively, what they understood about a lesson and what they did not understand (Bond, 2013).

To ease students into the process of evaluation, Preece (1995) also noted that students might evaluate the materials they use and the activities in which they are involved. Teachers might ask for suggestions for improving lessons they have presented. Peers might comment on the work of others by acknowledging what was good and suggesting a change or addition. Eventually students would "try a variety of strategies such as learning logs, conference records, response journals, self-report sheets, attitude surveys, and portfolio annotations" (p. 33). Teachers might encourage them to come up with questions on "attitudes, strategies, stumbling blocks, and indicators of progress or achievement" (p. 35). Students might also write one or two statements of meaningful goals for themselves, along with some strategies for achieving them. The key to success with such goals is follow-up: monitoring progress toward them at negotiated check-in times that allow for discussion and possible refinement or replacement of the goals.

To help students develop personal accountability for learning, Preece (1995) suggested that teachers might require students to keep a record book listing books/content read, completed assignments, projects, personal goals, accomplishments and what is working well, challenges to learning, and difficulties encountered. This record serves as a basis for conferences, either with the teacher or with parents. In either case, students use the record book to lead those conferences and report their progress on learning.

Rubrics are not just for evaluation (i.e., assigning a grade) of student work. They are excellent tools for self- and peer-assessment, orienting students to what constitutes quality from the viewpoint of experts and serving as guides for revision and improvement. They are particularly valuable when students have input into their construction. When students use a rubric to monitor their progress on an assignment, they might underline key phrases in the rubric, perhaps with a colored pencil, and then use that same color to underline or circle the parts of their draft that meet the standard identified in the rubric. If they can't find where in their work they have met the standard, they know immediately that revision is needed (Andrade, 2007). The key to success when using rubrics is to build time for revision into the learning plan.

The design of the rubric is also crucial. Rubrics add an objective component to assessment and evaluation, but caution should be exercised in their use for evaluation. Because a typical rubric has only five to seven categories, some criteria a grader values (e.g., originality) might not be among them. Students' unique perspectives and creativity might be stifled if they self-assess their work using only the standards on the rubric. Maja Wilson (2007) pointed out the importance of dialogue as an assessment tool. Where "ideas, expertise, intent and audience matter...a conversation is the only process responsive enough to expose the human mind's complex interactions with language" (p. 80). Dialogue is just as important as the rubric in assessment, and it may lead to changes in the rubric itself as teachers collaborate with their students.

According to Popham (2003), the purposes of classroom tests vary, but before constructing any test, teachers should first identify the kinds of instructional decisions that will be made based on test results and the kinds of score-based inferences needed to support those decisions. Teachers should be most concerned with the content validity of their tests, that is, how well their test items represent the curriculum to be assessed, which is essential for making accurate inferences about students' cognitive or affective status. "There should be no obvious content gaps, and the number and weighting of items on a test should be representative of the importance of the content standards being measured" (Chapter 5, All About Inferences section).
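Popham's point that the number of items should reflect the importance of each content standard can be made concrete with a simple test blueprint. The Python sketch below is only an illustration: the standard names, the importance weights, and the largest-remainder rule used to turn weights into whole item counts are assumptions, not part of Popham's guidance.

```python
from math import floor


def allocate_items(weights: dict[str, float], total_items: int) -> dict[str, int]:
    """Split total_items across standards in proportion to their importance weights.

    Uses the largest-remainder method so the counts always sum to total_items.
    """
    weight_sum = sum(weights.values())
    # Ideal (possibly fractional) number of items for each standard
    quotas = {s: total_items * w / weight_sum for s, w in weights.items()}
    # Start with the whole-number part of each share
    counts = {s: floor(q) for s, q in quotas.items()}
    leftover = total_items - sum(counts.values())
    # Give any remaining items to the standards with the largest fractional remainders
    by_remainder = sorted(quotas, key=lambda s: quotas[s] - counts[s], reverse=True)
    for s in by_remainder[:leftover]:
        counts[s] += 1
    return counts


# Hypothetical blueprint: ratios weighted 3, expressions 2, geometry 1, on a 20-item test
blueprint = allocate_items({"ratios": 3, "expressions": 2, "geometry": 1}, 20)
print(blueprint)
```

With these made-up weights, the 20 items split as 10, 7, and 3. A teacher would still review the result by hand, since importance weights are a judgment call rather than something a formula can decide.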

Test items can be classified as selected-response (e.g., multiple choice or true-false) or constructed-response (e.g., essay or short-answer). To better foster validity, Salend (2011) recommended matching test items to how content was taught. "Essay questions are usually best for assessing content taught through role-plays, simulations, cooperative learning, and problem solving; objective test items (such as multiple choice) tend to be more appropriate for assessing factual knowledge taught through teacher-directed activities" (p. 54). When constructing either type, Popham (2003) offered five pitfalls to avoid, all of which interfere with making accurate inferences about students' status. They are "(1) unclear directions, (2) ambiguous statements, (3) unintentional clues, (4) complex phrasing, and (5) difficult vocabulary" (Chapter 5, Roadblocks to Good Item-Writing section). Students benefit when the directions state the differential weighting of questions and the time limits. Ambiguity is reduced when pronouns have clear referents and phrases have a single meaning. Items should be written without obvious clues to the correct answer. Examples of unintentional clues include a correct answer-option that is longer than the incorrect answer-options, or grammatical tip-offs (e.g., never, always).

Illustrating Popham's (2003) pitfalls to avoid, Fisher and Frey (2007) provided an example showing the difficulty of writing stems for multiple-choice items. A student looks at a right triangle with legs each marked 5 cm. The intention is for the student to find the length of the missing hypotenuse, labeled X. Consider two stems: "Find X." and "Calculate the hypotenuse (X) of the right triangle." A middle school student in the beginning stages of learning English might simply circle the X. He has found it! However, the latter stem conveys the intended task and is, therefore, the better, unambiguous stem for the question (p. 107).
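For reference, the answer the intended stem calls for follows from the Pythagorean theorem, taking x as the hypotenuse and both legs as 5 cm:

```latex
x = \sqrt{5^2 + 5^2} = \sqrt{50} = 5\sqrt{2} \approx 7.07\ \text{cm}
```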

Fisher and Frey's (2007) example illustrated the importance of creating tests that are accessible to all learners. Salend (2011) noted four elements to consider: directions, format, readability, and legibility, elaborations of which follow.

A rubric can reduce grading bias. Popham (2009) indicated that teachers also need to know how to identify and eliminate bias in tests they create, as assessment bias "offends or unfairly penalizes test-takers because of personal characteristics such as race, gender, or socioeconomic status" (p. 9). They "need to know how to create and use rubrics, that is, scoring guides, so students’ performances on constructed-response items can be accurately appraised" and how to develop and possibly score a variety of assessment strategies, such as "performance assessments, portfolio assessments, exhibitions, peer assessments, and self assessments" (p. 9).

HOT Resources:

The Center for Standards, Assessment, and Accountability at WestEd has made available an Assessment Design Toolkit to help preK-12 educators write or select well-designed assessments. The toolkit contains 13 modules divided into four parts: key concepts, the five elements of assessment design (alignment, rigor, precision, bias and scoring), writing and selecting assessments, and reflecting on assessment design. Videos and supplementary materials are included.

Ben Clay of the Kansas Curriculum Center designed and developed Is This a Trick Question? A Short Guide to Writing Effective Test Questions (first printed 2001). This well-referenced guide of more than 60 pages was developed with funds from the Kansas State Department of Education.

According to Popham (2007b), assessments for the most part should be supplied to teachers rather than created by them. However, "many vendors are not providing the sorts of assessments that educators need" (p. 80). For classroom use, formative diagnostic tests and interim tests that predict performance on upcoming accountability tests are most in demand, as well as "instructionally sensitive accountability tests that can accurately evaluate school quality" (p. 80). Teachers must be able to evaluate a vendor's test to determine whether it fulfills the role it is intended to serve, whether formative, predictive, or evaluative. In doing so, Popham suggested that teachers keep the following questions in mind:

Does the test measure a manageable number of instructionally meaningful curricular aims?

Do the descriptive materials accompanying the test clearly communicate the test's assessment targets?

Are there sufficient items on the test that measure each assessed curricular aim to let teachers and students know whether a student has mastered each skill or body of knowledge?

Are the items on the test more likely to assess what a student has been taught in school rather than what that student might have learned elsewhere? (p. 82).
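Popham's third question, whether enough items measure each curricular aim, can be checked mechanically once each item is tagged with the aim it measures. The Python sketch below assumes such tags exist and uses a hypothetical minimum of three items per aim; Popham's questions do not set that number, so a teacher would choose a threshold suited to the test.

```python
from collections import Counter


def under_sampled_aims(item_aims: list[str], aims: list[str], min_items: int = 3) -> list[str]:
    """Return the curricular aims measured by fewer than min_items test items.

    item_aims holds one aim label per test item; aims lists every aim the test claims to assess.
    min_items is a hypothetical threshold, not a published standard.
    """
    counts = Counter(item_aims)  # Counter returns 0 for aims with no items at all
    return [aim for aim in aims if counts[aim] < min_items]


# Hypothetical 8-item test tagged against four claimed aims A-D
items = ["A", "A", "A", "B", "C", "C", "C", "C"]
print(under_sampled_aims(items, ["A", "B", "C", "D"]))
```

Here aims B and D would be flagged: B has a single item, and D, although claimed, has none, which is exactly the kind of content gap that would keep teachers from knowing whether a student has mastered that skill.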

Whether a test is teacher-made or vendor-made, the Test Accessibility and Modification Inventory is a valuable evaluation tool for conducting a comprehensive analysis of tests and test items, including computer-based tests. It was written by Peter Beddow, Ryan Kettler, and Stephen Elliott (2008) of Vanderbilt University. The analysis considers the passage/item stimulus, the item stem, visuals, answer choices, page/item layout, fairness, and the depth of knowledge required to answer a question. Analysis of computer-based tests also considers the test delivery system, test layout, test-taker training, and audio. The latter is of particular importance because computer-based assessments are being used for the Common Core State Standards in Mathematics.

As vendors create tests for online delivery, it is important to note that the types of assessment questions being developed have moved beyond traditional multiple-choice, true-false, and fill-in-the-blank items. Per the U.S. Department of Education (2016), technology-based assessments enable expanded question types. Examples include graphic responses, in which students might draw, move, arrange, or select graphic regions; hot text within passages, where students select or rearrange sentences or phrases; math questions in which students respond by entering an equation; and performance-based assessments, in which students perform a series of complex tasks. A math task might ask students to analyze a graph of actual data and determine the linear relationship between quantities, thus testing their cognitive thinking skills and their ability to apply their knowledge to solving real-world problems (p. 55).

Are We Ready for Testing Under Common Core State Standards?

Read Patricia Deubel's commentary in THE Journal (2010, September 15). https://thejournal.com/articles/2010/09/15/are-we-ready-for-testing-under-common-core-state-standards.aspx Learn about the rise of online testing and concerns for educators preparing students for Common Core State Standards assessments.

Everyone makes mistakes. From the perspective of assessment for learning, "One of the best ways to encourage students to learn from their mistakes is to allow them to redo their work" for full credit (Lent, 2012, p. 141). However, there are some guidelines to consider so that redos do not become a logistical nightmare or get used inappropriately just to swap grades. The goal of redos is to engage learners in deeper learning. Rick Wormeli (2011) elaborated on this idea in Redos and Retakes Done Right, which includes 14 Practical Tips for Managing Redos in the Classroom.

Releah Lent (2012) also provided tips to help educators develop their policy on redos. Key ideas included:

The bottom line, according to Lent (2012), is that "It is time to move from an unrealistic system where students have only one chance to get it right to a system where they understand that redos are not only OK but expected" (p. 141).

The following are tips for allowing test retakes, which history and journalism teacher David Cutler (2019) developed and presents to his high school learners during the first week of a school year. Cutler's policy could be applicable in the math classroom.

Sarah Morris (2024) offered three practical strategies to manage retakes "while respecting professional boundaries and ensuring equitable opportunities for all students."